The Valence VWS-173807702 is a mid-tower supporting 1x Intel Xeon W-2400 Series processor and 8x DDR5 memory slots.


AI & Deep Learning

Training, building, and deploying deep learning and AI models can solve complex problems with less hand-written code. Across data collection, annotation, training, and evaluation, the parallelism of GPUs lets you parse, train, and evaluate at high throughput. Multi-GPU configurations process massive datasets faster, shortening the time it takes to develop and iterate on AI models.


Life Sciences


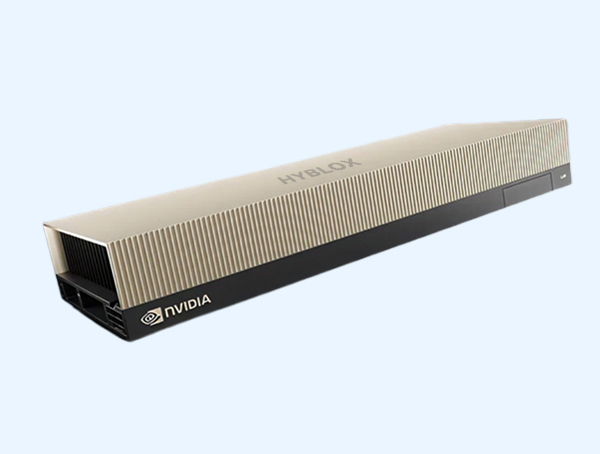

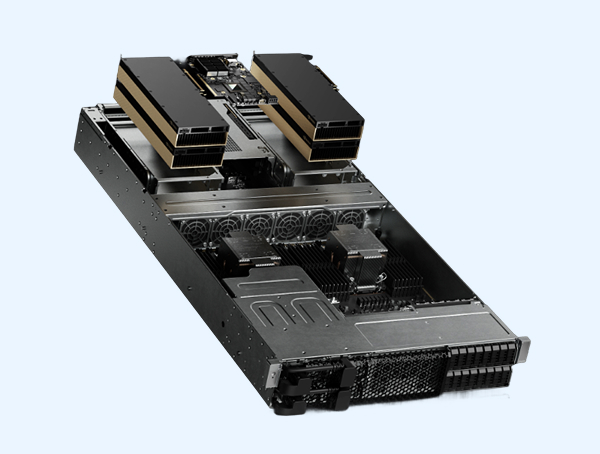

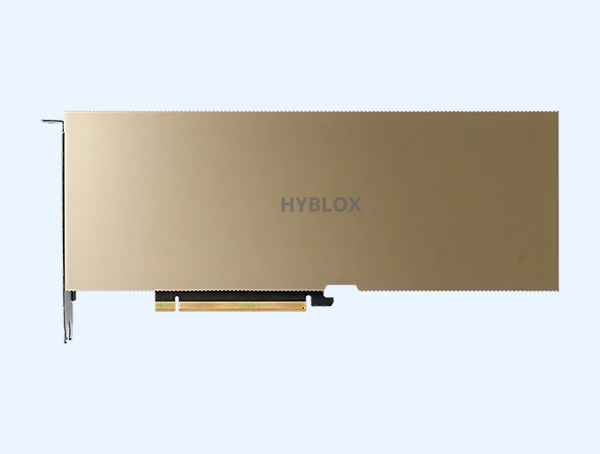

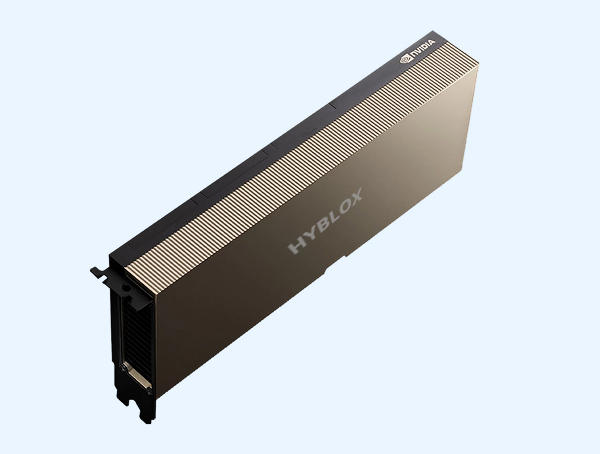

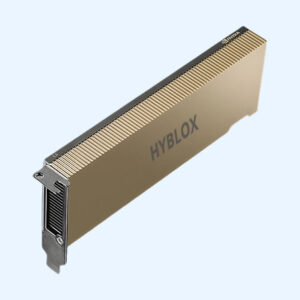

🚀 NVIDIA L40S – The Most Powerful Universal Data‑Center GPU

The NVIDIA L40S, based on the Ada Lovelace architecture, accelerates AI, graphics, and media processing workloads on a single GPU.

🔹 Generative AI & LLM Acceleration

- Up to 1,466 TFLOPS Tensor Core performance (FP8 + sparsity)

- 48 GB GDDR6 ECC memory with ≈ 864 GB/s bandwidth

- 18,176 CUDA® cores, 568 4th‑gen Tensor Cores, 142 3rd‑gen RT Cores (~212 TFLOPS RT)

Specifications are taken from the NVIDIA L40S product page and datasheet.
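To put the memory capacity and bandwidth figures listed above in context, here is a minimal back-of-envelope sketch in Python. It is not a benchmark: it assumes model weights dominate memory use and memory traffic, treats 1 GB as 1e9 bytes, and uses a hypothetical `reserve_gb` allowance for KV cache and activations. The model sizes (7B, 13B, 70B parameters) are illustrative and do not come from the product page.

```python
# Rough single-GPU sizing from the listed 48 GB capacity and 864 GB/s bandwidth.
# Assumptions (not from the product page): weights dominate memory traffic,
# 1 GB = 1e9 bytes, and reserve_gb is a hypothetical allowance for KV cache
# and activations.

GPU_MEMORY_GB = 48.0         # capacity from the spec list
GPU_BANDWIDTH_GB_S = 864.0   # approximate bandwidth from the spec list

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0}


def weight_footprint_gb(params_billion: float, dtype: str) -> float:
    """Memory needed for the model weights alone, in GB."""
    return params_billion * BYTES_PER_PARAM[dtype]


def fits_in_memory(params_billion: float, dtype: str, reserve_gb: float = 8.0) -> bool:
    """True if the weights fit after setting aside reserve_gb of headroom."""
    return weight_footprint_gb(params_billion, dtype) <= GPU_MEMORY_GB - reserve_gb


def decode_tokens_per_second_ceiling(params_billion: float, dtype: str) -> float:
    """Upper bound on batch-1 decoding speed if every weight is read once per token."""
    return GPU_BANDWIDTH_GB_S / weight_footprint_gb(params_billion, dtype)


if __name__ == "__main__":
    for size in (7, 13, 70):
        for dtype in ("fp16", "fp8"):
            fits = fits_in_memory(size, dtype)
            ceiling = decode_tokens_per_second_ceiling(size, dtype)
            print(f"{size}B {dtype}: fits={fits}, ceiling={ceiling:.1f} tokens/s")
```

Real throughput depends on batch size, kernel efficiency, and KV-cache traffic, so treat the ceiling as an optimistic bound rather than an expected result.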

🔹 Graphics, Media & Virtualization

- Supports **Omniverse™, RTX™, vWS/vGPU** workflows with full virtualization support

- Hardware media engines: **3× NVENC + 3× NVDEC with AV1 support**

- Passive cooling, dual-slot PCIe Gen4 x16 form factor

🔹 GPU Virtualization & Enterprise Reliability

- Multi‑Instance GPU (MIG) is **not supported** on the L40S; GPU sharing is provided through **NVIDIA vGPU** software

- **NEBS Level 3 ready**, **secure boot with Root of Trust**, ECC memory

- Designed for 24/7 operation in enterprise data centers

🔹 Performance Comparison vs Legacy GPUs

- ~**5× inference throughput** compared to NVIDIA A40

- Up to **1.2× inference** and **1.7× training** performance vs HGX A100 systems

- Consolidates AI, graphics & media acceleration into a single cost-efficient platform

Why Choose the NVIDIA L40S for Your Data Center?

- Built for AI, visualization, and media in one universal GPU platform

- Enterprise-grade reliability with ECC memory, secure boot firmware, and standards compliance

- Supports scalable GPU workloads across inference, rendering farms, virtual workstations, and more
